A belated memo. I threw this together quite a while ago. I will write an updated version later; for now this just gives the general idea.

Network settings

A flat network is sufficient: 192.168.11.0/24

Topology

Everything hangs off a single flat network. No VLANs or similar settings are used.

------------------------------ 192.168.11.0/24
        |                 |
Node1/Node2/Node3   Default gateway router

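However, to reach the pods from a browser, the upstream router needs a static route for 10.244.0.0/16 pointing at node1 (192.168.11.181). Everything in this document is done on the command line, so browser access is not required.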
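If the upstream router happens to be a Linux box, the static route could look roughly like the following. This is only a sketch: it assumes iproute2 on the router and that node1 is 192.168.11.181 as in the node list of this memo, and the helper name pod_route_cmd is made up for illustration. On a vendor router the equivalent setting lives in its own UI or CLI.

```shell
# Hypothetical helper: print the iproute2 command that routes the pod network
# to a given node. It only prints the command; nothing is changed.
pod_route_cmd() {
    pod_cidr="$1"   # e.g. 10.244.0.0/16 (flannel pod network)
    next_hop="$2"   # e.g. 192.168.11.181 (node1, assumed)
    printf 'ip route add %s via %s\n' "$pod_cidr" "$next_hop"
}

# Show the command for this environment.
pod_route_cmd 10.244.0.0/16 192.168.11.181

# To actually apply it on the router (as root), one could run:
#   eval "$(pod_route_cmd 10.244.0.0/16 192.168.11.181)"
```

Printing the command first makes it easy to review before anything on the router is changed.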

Kubernetes node setup and base installation

CentOS 7 (physical or virtual)

Build three VMs, each with 2 vCPU, 4 GB RAM, a 20 GB disk, and 1 NIC.

ent1-k8snode1.ent1.cloudshift.corp 192.168.11.181 (Kubernetes master node)

ent1-k8snode2.ent1.cloudshift.corp 192.168.11.182 (Kubernetes worker node)

ent1-k8snode3.ent1.cloudshift.corp 192.168.11.183 (Kubernetes worker node)

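CentOS 7 minimal installation

Do the following on all nodes.

The installation media is CentOS-7-x86_64-Minimal-2003.iso

Network interface

Set a static IP address/netmask and the gateway manually

DNS: 8.8.8.8

Set the hostname correctly

After installing CentOS 7

Run the following commands.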

# Set environment variables

cat << EOF > /etc/environment

LANG=en_US.utf-8

LC_ALL=en_US.utf-8

EOF

source /etc/environment

# Relax SELinux (switch to permissive mode)

sed -i".orig" -e "s/SELINUX=enforcing/SELINUX=permissive/g" /etc/selinux/config

setenforce 0

# Hostname settings

# No DNS server is used, so names are resolved through /etc/hosts

cat <<EOF >> /etc/hosts

192.168.11.181 ent1-k8snode1.ent1.cloudshift.corp ent1-k8snode1

192.168.11.182 ent1-k8snode2.ent1.cloudshift.corp ent1-k8snode2

192.168.11.183 ent1-k8snode3.ent1.cloudshift.corp ent1-k8snode3

EOF

cat /etc/hosts

# Set the time zone

# To list the available time zones:

# timedatectl list-timezones

# To use UTC instead:

# timedatectl set-timezone UTC

timedatectl set-timezone Asia/Tokyo

date

# NTP server settings

sed -i".orig" -e "/centos.pool.ntp.org/d" /etc/chrony.conf && echo "server 192.168.8.4 iburst" >> /etc/chrony.conf

systemctl restart chronyd.service && systemctl enable chronyd.service

chronyc sources

# Service settings (disable firewalld)

systemctl disable firewalld && systemctl stop firewalld

# Check Internet connectivity

ping -c 3 packages.cloud.google.com

# Install the minimum required packages

yum -y install wget git

# Install open-vm-tools only when running on VMware

lspci | grep -q VMware && yum -y install open-vm-tools

# yum update

yum -y update

# Kernel network settings (pass bridged traffic to iptables, enable IP forwarding)

cat <<EOF > /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

EOF

# Comment out the swap entry in /etc/fstab (by substitution, without opening vi)

sed -i".orig" -e "s|/dev/mapper/centos-swap|#/dev/mapper/centos-swap|g" /etc/fstab

# Add the Kubernetes repository

cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://packages.cloud.google.com/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://packages.cloud.google.com/yum/doc/yum-key.gpg https://packages.cloud.google.com/yum/doc/rpm-package-key.gpg

EOF

# Reboot

reboot
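After the reboot it may be worth confirming that the preparation took effect, since kubeadm's pre-flight checks complain about active swap and missing network settings. The following is a minimal sketch, not part of the original procedure; the helper names swap_is_off and sysctl_conf_ok are hypothetical, and the second helper only inspects the file written above, not the live kernel values.

```shell
# Hypothetical helpers to confirm the preparation survived the reboot.

# Succeeds if the given file (in /proc/swaps format) lists no active swap area.
# /proc/swaps always has one header line; any further line is an active swap area.
swap_is_off() {
    swaps_file="${1:-/proc/swaps}"
    awk 'NR > 1 { found = 1 } END { exit (found ? 1 : 0) }' "$swaps_file"
}

# Succeeds if the three keys written to k8s.conf earlier are all set to 1.
sysctl_conf_ok() {
    conf_file="${1:-/etc/sysctl.d/k8s.conf}"
    for key in net.bridge.bridge-nf-call-ip6tables \
               net.bridge.bridge-nf-call-iptables \
               net.ipv4.ip_forward; do
        # Accept "key = 1" with optional spaces around "=".
        grep -Eq "^[[:space:]]*${key}[[:space:]]*=[[:space:]]*1[[:space:]]*$" "$conf_file" || return 1
    done
}

# Example use on a node:
#   swap_is_off && sysctl_conf_ok && echo "node looks prepared"
```

Both helpers take an optional file path, which makes them easy to try against a copy of the files before relying on them.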

# Install Docker and Kubernetes

yum -y update

yum install -y docker kubelet kubeadm kubectl kubernetes-cni

yum clean all

systemctl enable docker && systemctl start docker

systemctl enable kubelet && systemctl start kubelet

Creating the Kubernetes environment

Run the following on the master node (node1).

cd ~/

kubeadm init --pod-network-cidr=10.244.0.0/16 --apiserver-advertise-address 192.168.11.181 --service-cidr 192.168.11.0/24

10.244.0.0/16 is the address range that flannel, which is installed later, is preconfigured to use.
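Note that the kubeadm init command above passes 192.168.11.0/24 as the service CIDR, which is the same range as the node network. Overlapping ranges can lead to routing conflicts between service IPs and real hosts, so it may be worth checking the ranges before running kubeadm init (kubeadm's default service CIDR is 10.96.0.0/12). Below is a minimal sketch of such a check in plain shell arithmetic; the helper names ip_to_int and cidr_overlap are hypothetical.

```shell
# Hypothetical helper: convert a dotted IPv4 address to a single integer.
ip_to_int() {
    old_ifs=$IFS
    IFS=.
    set -- $1          # split a.b.c.d into four positional parameters
    IFS=$old_ifs
    echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Hypothetical helper: succeed if two IPv4 CIDR blocks overlap.
# Two blocks overlap exactly when they agree on the bits of the shorter prefix.
cidr_overlap() {
    a_ip=${1%/*}; a_len=${1#*/}
    b_ip=${2%/*}; b_len=${2#*/}
    if [ "$a_len" -lt "$b_len" ]; then len=$a_len; else len=$b_len; fi
    shift_bits=$(( 32 - len ))
    a_net=$(( $(ip_to_int "$a_ip") >> shift_bits ))
    b_net=$(( $(ip_to_int "$b_ip") >> shift_bits ))
    [ "$a_net" -eq "$b_net" ]
}

# The ranges used in this memo: service CIDR vs. node network.
if cidr_overlap 192.168.11.0/24 192.168.11.0/24; then
    echo "service CIDR overlaps the node network"
fi
```

With the default 10.96.0.0/12 in place of the first argument, the check would report no overlap with either the node network or the flannel pod network.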

--- Output ---

W0521 09:48:02.506278 9875 configset.go:202] WARNING: kubeadm cannot validate component configs for API groups [kubelet.config.k8s.io kubeproxy.config.k8s.io]

[init] Using Kubernetes version: v1.18.3

[preflight] Running pre-flight checks

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Starting the kubelet

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "ca" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [ent1-k8snode1.ent1.cloudshift.corp kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [192.168.11.1 192.168.11.181]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "front-proxy-ca" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] Generating "etcd/ca" certificate and key

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [ent1-k8snode1.ent1.cloudshift.corp localhost] and IPs [192.168.11.181 127.0.0.1 ::1]

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [ent1-k8snode1.ent1.cloudshift.corp localhost] and IPs [192.168.11.181 127.0.0.1 ::1]

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Generating "sa" key and public key

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Writing "admin.conf" kubeconfig file

[kubeconfig] Writing "kubelet.conf" kubeconfig file

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

W0521 09:48:54.393610 9875 manifests.go:225] the default kube-apiserver authorization-mode is "Node,RBAC"; using "Node,RBAC"

[control-plane] Creating static Pod manifest for "kube-scheduler"

W0521 09:48:54.394576 9875 manifests.go:225] the default kube-apiserver authorization-mode is "Node,RBAC"; using "Node,RBAC"

[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

[apiclient] All control plane components are healthy after 21.502004 seconds

[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config-1.18" in namespace kube-system with the configuration for the kubelets in the cluster

[upload-certs] Skipping phase. Please see --upload-certs

[mark-control-plane] Marking the node ent1-k8snode1.ent1.cloudshift.corp as control-plane by adding the label "node-role.kubernetes.io/master=''"

[mark-control-plane] Marking the node ent1-k8snode1.ent1.cloudshift.corp as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule]

[bootstrap-token] Using token: 8y6dj6.vjurjjk269oy6i1j

[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to get nodes

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstrap-token] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstrap-token] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace

[kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.11.181:6443 --token 8y6dj6.vjurjjk269oy6i1j \
    --discovery-token-ca-cert-hash sha256:90bddb2f5786b0a54fc97ba8ca35a5987df714993c421738492e1158542eb97a

------------

mkdir -p $HOME/.kube

cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

chown $(id -u):$(id -g) $HOME/.kube/config

Installing flannel

kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

kubectl get pods --all-namespaces

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system coredns-66bff467f8-8wrj2 0/1 Running 0 77s

kube-system coredns-66bff467f8-fntzj 1/1 Running 0 77s

kube-system etcd-ent1-k8snode1.ent1.cloudshift.corp 1/1 Running 0 90s

kube-system kube-apiserver-ent1-k8snode1.ent1.cloudshift.corp 1/1 Running 0 90s

kube-system kube-controller-manager-ent1-k8snode1.ent1.cloudshift.corp 1/1 Running 0 90s

kube-system kube-flannel-ds-amd64-gs2lk 1/1 Running 0 36s

kube-system kube-proxy-72mqz 1/1 Running 0 77s

kube-system kube-scheduler-ent1-k8snode1.ent1.cloudshift.corp 1/1 Running 0 90s

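Setting up the worker nodes (node2, node3)

Run the following on node2 and node3. This is the command printed at the end of the kubeadm init output on node1. The token and hash shown here come from a different run than the output above, so use the values from your own output.

kubeadm join 192.168.11.181:6443 --token g05oz7.xh19nfex2lprxn2k \
    --discovery-token-ca-cert-hash sha256:1bd007ecfc178e49ef9a5797ff6275deb932b17ffd2865dde5cf4a6156003a59

node2 and node3 are not accessed again after this, so you can log off from them.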
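Copying the join command by hand is error-prone because it spans two lines. If the kubeadm init output was saved to a file, the command could be pulled out with something like the sketch below. The helper name extract_join_cmd and the file name kubeadm-init.log are assumptions for illustration. If the output was not saved, or the token has expired (bootstrap tokens are valid for 24 hours by default), running kubeadm token create --print-join-command on node1 prints a fresh join command.

```shell
# Hypothetical helper: print the "kubeadm join" command found in saved
# "kubeadm init" output, joined onto a single line.
extract_join_cmd() {
    awk '
        /^[ \t]*kubeadm join/ { collecting = 1 }
        collecting {
            line = $0
            # sub() returns 1 when a trailing backslash was removed,
            # which means the command continues on the next line.
            continued = sub(/[ \t]*\\$/, "", line)
            gsub(/^[ \t]+|[ \t]+$/, "", line)
            cmd = (cmd == "" ? line : cmd " " line)
            if (!continued) { print cmd; exit }
        }
    ' "$1"
}

# Example use (file name is an assumption):
#   kubeadm init ... | tee kubeadm-init.log      # on node1
#   extract_join_cmd kubeadm-init.log            # prints the one-line join command
```

The one-line form can then be pasted into node2 and node3 without worrying about the line continuation.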

From here on, all operations are performed on node1.

Setting labels

kubectl get node

NAME STATUS ROLES AGE VERSION

ent1-k8snode1.ent1.cloudshift.corp Ready master 3m15s v1.18.3

ent1-k8snode2.ent1.cloudshift.corp NotReady <none> 22s v1.18.3

ent1-k8snode3.ent1.cloudshift.corp NotReady <none> 21s v1.18.3

kubectl label node ent1-k8snode2.ent1.cloudshift.corp node-role.kubernetes.io/router=

kubectl label node ent1-k8snode3.ent1.cloudshift.corp node-role.kubernetes.io/worker=

kubectl get node

NAME STATUS ROLES AGE VERSION

ent1-k8snode1.ent1.cloudshift.corp Ready master 7m36s v1.18.3

ent1-k8snode2.ent1.cloudshift.corp Ready router 4m43s v1.18.3

ent1-k8snode3.ent1.cloudshift.corp Ready worker 4m42s v1.18.3
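In the first listing the two joined nodes are still NotReady, and a few minutes later all three are Ready. Rather than re-running the command by eye, the waiting could be scripted. The sketch below filters kubectl get node output for nodes that are not yet Ready; the helper name not_ready_nodes is hypothetical. As an alternative, kubectl wait --for=condition=Ready node --all --timeout=300s blocks until every node reports Ready.

```shell
# Hypothetical helper: read "kubectl get node" output on stdin and print the
# names of nodes whose STATUS column is not exactly "Ready".
not_ready_nodes() {
    awk 'NR > 1 && $2 != "Ready" { print $1 }'
}

# Example use on node1: poll until the list is empty.
#   while [ -n "$(kubectl get node | not_ready_nodes)" ]; do
#       sleep 5
#   done
```

Because the comparison is exact, a cordoned node shown as Ready,SchedulingDisabled is also reported, which is usually what one wants before labeling or scheduling work.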

kubectl get pod --all-namespaces -o wide

NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

kube-system coredns-66bff467f8-8wrj2 1/1 Running 0 3m18s 10.244.0.2 ent1-k8snode1.ent1.cloudshift.corp <none> <none>

kube-system coredns-66bff467f8-fntzj 1/1 Running 0 3m18s 10.244.0.3 ent1-k8snode1.ent1.cloudshift.corp <none> <none>

kube-system etcd-ent1-k8snode1.ent1.cloudshift.corp 1/1 Running 0 3m31s 192.168.11.181 ent1-k8snode1.ent1.cloudshift.corp <none> <none>

kube-system kube-apiserver-ent1-k8snode1.ent1.cloudshift.corp 1/1 Running 0 3m31s 192.168.11.181 ent1-k8snode1.ent1.cloudshift.corp <none> <none>

kube-system kube-controller-manager-ent1-k8snode1.ent1.cloudshift.corp 1/1 Running 0 3m31s 192.168.11.181 ent1-k8snode1.ent1.cloudshift.corp <none> <none>

kube-system kube-flannel-ds-amd64-gs2lk 1/1 Running 0 2m37s 192.168.11.181 ent1-k8snode1.ent1.cloudshift.corp <none> <none>

kube-system kube-flannel-ds-amd64-sllgv 1/1 Running 0 46s 192.168.11.183 ent1-k8snode3.ent1.cloudshift.corp <none> <none>

kube-system kube-flannel-ds-amd64-x7pcv 1/1 Running 0 47s 192.168.11.182 ent1-k8snode2.ent1.cloudshift.corp <none> <none>

kube-system kube-proxy-72mqz 1/1 Running 0 3m18s 192.168.11.181 ent1-k8snode1.ent1.cloudshift.corp <none> <none>

kube-system kube-proxy-dndrf 1/1 Running 0 46s 192.168.11.183 ent1-k8snode3.ent1.cloudshift.corp <none> <none>

kube-system kube-proxy-pkwdt 1/1 Running 0 47s 192.168.11.182 ent1-k8snode2.ent1.cloudshift.corp <none> <none>

kube-system kube-scheduler-ent1-k8snode1.ent1.cloudshift.corp 1/1 Running 0 3m31s 192.168.11.181 ent1-k8snode1.ent1.cloudshift.corp <none> <none>

Next I plan to install the metrics server and other components. Everything below is still under construction.

Troubleshooting

<If deployment fails>

[root@node1 ~]# kubectl get pods -n kube-system -o=wide

NAME READY STATUS RESTARTS AGE IP NODE

dummy-2088944543-p4wtp 1/1 Running 0 9m 192.168.10.31 node1.example.local

etcd-node1.example.local 1/1 Running 0 9m 192.168.10.31 node1.example.local

kube-apiserver-node1.example.local 1/1 Running 0 9m 192.168.10.31 node1.example.local

kube-controller-manager-node1.example.local 1/1 Running 0 9m 192.168.10.31 node1.example.local

kube-discovery-1769846148-6c3bl 1/1 Running 0 9m 192.168.10.31 node1.example.local

kube-dns-2924299975-xl635 4/4 Running 0 9m 10.244.1.2 node2.example.local

kube-proxy-f8sn1 1/1 Running 0 2m 192.168.10.33 node3.example.local

kube-proxy-psdz7 1/1 Running 0 2m 192.168.10.32 node2.example.local

kube-proxy-wx780 1/1 Running 0 9m 192.168.10.31 node1.example.local

kube-scheduler-node1.example.local 1/1 Running 0 9m 192.168.10.31 node1.example.local

[root@node1 ~]# kubectl describe pod kube-dns-2924299975-xl635 --namespace=kube-system

Check the messages shown in the output.

<To reset, rebuild, or uninstall Kubernetes>

<Node1>

kubeadm reset

rm -rf .kube/

<node2,node3>

systemctl stop kubelet;

docker rm -f $(docker ps -q); mount | grep "/var/lib/kubelet/*" | awk '{print $3}' | xargs umount 1>/dev/null 2>/dev/null;

rm -rf /var/lib/kubelet /etc/kubernetes /var/lib/etcd /etc/cni;

ip link set cbr0 down; ip link del cbr0;

ip link set cni0 down; ip link del cni0;

systemctl start kubelet

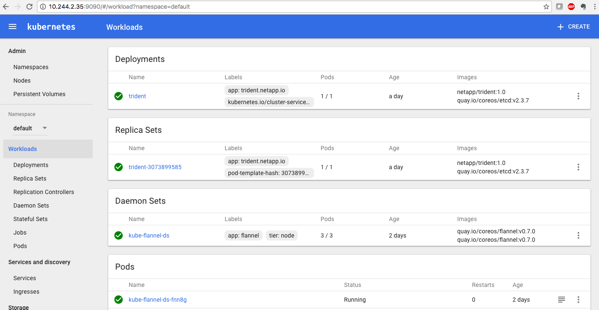

Bonus

I want a UI.

kubectl create -f https://rawgit.com/kubernetes/dashboard/master/src/deploy/kubernetes-dashboard.yaml

kubectl config set-credentials cluster-admin --username=admin --password=Netapp1!

kubectl get pods --all-namespaces -o=wide

NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE

default kube-flannel-ds-fnn8g 2/2 Running 0 2d 192.168.10.32 node2.example.local

default kube-flannel-ds-hz84h 2/2 Running 0 2d 192.168.10.31 node1.example.local

default kube-flannel-ds-p9k3b 2/2 Running 0 2d 192.168.10.33 node3.example.local

default nginx-pod 1/1 Running 0 20h 10.244.2.32 node3.example.local

default trident-3073899585-wlhdq 2/2 Running 1 1d 10.244.2.23 node3.example.local

kube-system dummy-2088944543-p4wtp 1/1 Running 0 2d 192.168.10.31 node1.example.local

kube-system etcd-node1.example.local 1/1 Running 0 2d 192.168.10.31 node1.example.local

kube-system kube-apiserver-node1.example.local 1/1 Running 0 2d 192.168.10.31 node1.example.local

kube-system kube-controller-manager-node1.example.local 1/1 Running 1 2d 192.168.10.31 node1.example.local

kube-system kube-discovery-1769846148-6c3bl 1/1 Running 0 2d 192.168.10.31 node1.example.local

kube-system kube-dns-2924299975-xl635 4/4 Running 0 2d 10.244.1.2 node2.example.local

kube-system kube-proxy-f8sn1 1/1 Running 0 2d 192.168.10.33 node3.example.local

kube-system kube-proxy-psdz7 1/1 Running 0 2d 192.168.10.32 node2.example.local

kube-system kube-proxy-wx780 1/1 Running 0 2d 192.168.10.31 node1.example.local

kube-system kube-scheduler-node1.example.local 1/1 Running 1 2d 192.168.10.31 node1.example.local

kube-system kubernetes-dashboard-3203831700-dk7jm 1/1 Running 0 3h 10.244.2.35 node3.example.local

When you access http://10.244.2.35:9090/ ...